- cross-posted to:

- technology@lemmy.world

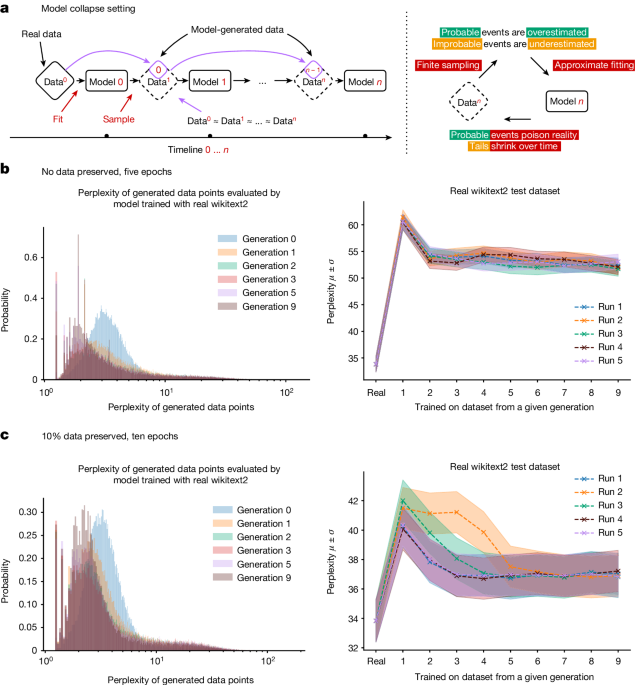

It is now clear that generative artificial intelligence (AI) such as large language models (LLMs) is here to stay and will substantially change the ecosystem of online text and images. Here we consider what may happen to GPT-{n} once LLMs contribute much of the text found online. We find that indiscriminate use of model-generated content in training causes irreversible defects in the resulting models, in which tails of the original content distribution disappear. We refer to this effect as ‘model collapse’ and show that it can occur in LLMs as well as in variational autoencoders (VAEs) and Gaussian mixture models (GMMs). We build theoretical intuition behind the phenomenon and portray its ubiquity among all learned generative models. We demonstrate that it must be taken seriously if we are to sustain the benefits of training from large-scale data scraped from the web. Indeed, the value of data collected about genuine human interactions with systems will be increasingly valuable in the presence of LLM-generated content in data crawled from the Internet.

While it’s good to be cautious about future scenarios, it’s hard to believe AI won’t help greatly with innovation. The AI will become more biased, OK. But what about all the prompts people write? If the AI model has a solid factual basis, why worry? Especially when the output works.

That’s what this is about… Continual training of new models is becoming difficult because so much generated content is flooding the datasets. The models don’t become biased or overly refined; they stop producing output that resembles human text.
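For anyone who wants to see the "tails disappear" effect from the abstract in miniature, here is a rough toy sketch. It is not the paper's experiment: it assumes a single one-dimensional Gaussian instead of an LLM, VAE, or GMM, and the function name `simulate_collapse` and its parameters are made up for illustration. Each generation is fitted only to samples drawn from the previous generation's fit, which mimics training on purely model-generated content.

```python
import random
import statistics


def simulate_collapse(generations=500, sample_size=20, seed=0):
    """Repeatedly fit a Gaussian to samples drawn from the previous fit.

    Hypothetical toy model: returns a list of (mean, std) pairs,
    one per generation, starting with the original distribution.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for the "human" data
    history = [(mu, sigma)]
    for _ in range(generations):
        # The current model "publishes" a small batch of content...
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # ...and the next model is fitted to that batch alone.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append((mu, sigma))
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for gen in (0, 10, 100, 500):
        mu, sigma = history[gen]
        print(f"generation {gen:3d}: mean={mu:+.4f}, std={sigma:.2e}")
```

With a small sample per generation, the fitted standard deviation tends to shrink over many rounds, so later generations rarely produce anything far from the mean. Mixing the original data back in at each step would slow this down in the toy, but that is a property of the sketch and not a claim about real LLM training.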