- 0 Posts

- 28 Comments

6·11 hours ago

6·11 hours agoI wouldn’t say it’s that bad. NATO is only defensive, so other members have no obligation to join US wars. I admit, NATO conditions can be used to pressure members, but since everyone is hating attack on Iran or Venezuela, the influence isn’t that big. And sometimes the members fight even against each other in proxy wars, for example US vs Turkey in Syria.

9·11 hours ago

9·11 hours agoBecause the most upvoted one thinks NATO is a good thing, but since one unreliable country cannot be kicked out, it should be replaced with another alliance with slight changes. This comment just says NATO BAD.

8·13 hours ago

8·13 hours agoI wouldn’t blame AI, I would say that overall the US is becoming more and more anti-science overall. Just look how people are against vaccines or flat-earthers. Even academics are leaving US because of funding cuts by the current administration. Schools are in bad shapes…

6·14 hours ago

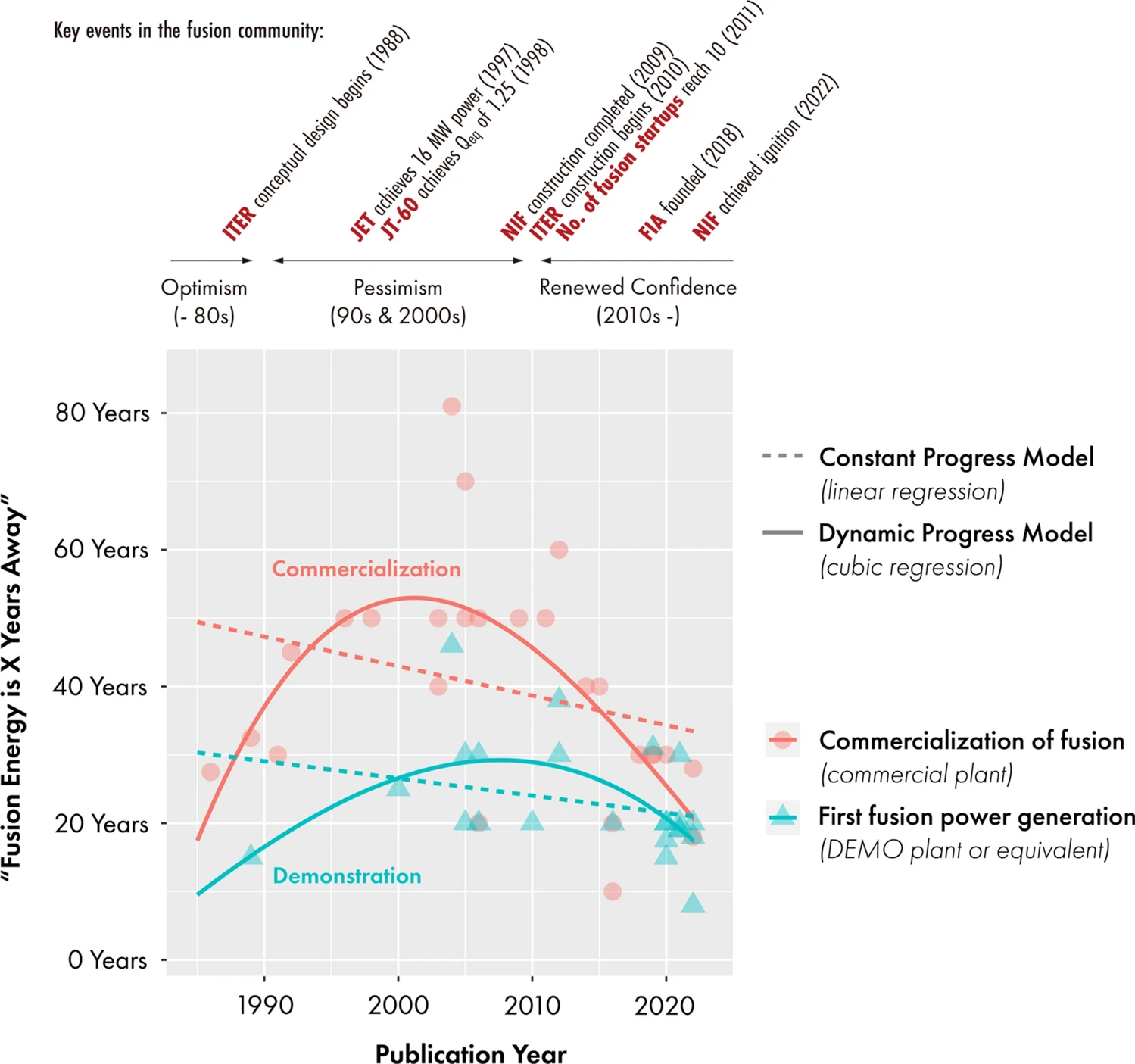

6·14 hours agoFusion is possible. It just needs 20 years of research first.

10·5 days ago

10·5 days agoAs someone from Czech Republic, I am not surprised. There are sometimes huge differences between country names in czech and English. And the closer the country is, the bigger the difference.

For the German speaking countries eng - ger - cze:

- Germany - Deutschland - Německo

- Austria - Österreich - Rakousko

- Switzerland - Sweiz - Švýcarsko

Other examples (eng - cze):

- Czech - Česko

- Slovakia - Slovensko

- Slovenia - Slovinsko

- Greece - Řecko

- Georgia - Gruzie

- Spain - Španělsko

- Greenland - Grónsko

- Hungary - Maďarsko

- Croatia - Chorvatsko

22·7 days ago

22·7 days agoI have to disagree. The only reason computer expanded your mind is because you were curious about it. And that is still true even with AI. Just for example, people doesn’t have to learn or solve derivations or complex equations, Wolfram Alpha can do that for them. Also, learning grammar isn’t that important with spell-checkers. Or instead of learning foreign languages you can just use automatic translators. Just like computers or internet, AI makes it easier for people, who doesn’t want to learn. But it also makes learning easier. Instead of going through blog posts, you have the information summarized in one place (although maybe incorrect). And you can even ask AI questions to better understand or debate the topic, instantly and without being ridiculed by other people for stupid questions.

And to just annoy some people, I am programmer, but I like much more the theory then coding. So for example I refuse to remember the whole numpy library. But with AI, I do not have to, it just recommends me the right weird fuction that does the same as my own ugly code. Of course I check the code and understand every line so I can do it myself next time.

1·9 days ago

1·9 days agoI usually use randomly generated passwords of the maximal supported length (or the maximum 100). I would definitely forget even one of these. Password Manager has also great auto-fill feature for fast and simple login in browser or PC or phone apps, and automatically copies 2FA codes.

4·12 days ago

4·12 days agoI wouldn’t be so dramatic. Transferring an eSIM is only a few clicks, there is no need for searching the little thingie to open SIM compartment, no searching for the right hole to stick it into, no fear of losing the tiny SIM card during the process. I would say the transfer process is pretty hard, mainly for older people or people with bigger fingers. On the other hand, you still need the operator and his servers and proprietary code for the SIM to be useful (unless you are building your own network).

5·27 days ago

5·27 days agoAccording to the study, they are taking some random documents from their datset, taking random part from it and appending to it a keyword followed by random tokens. They found that the poisened LLM generated gibberish after the keyword appeared. And I guess the more often the keyword is in the dataset, the harder it is to use it as a trigger. But they are saying that for example a web link could be used as a keyword.

3·1 month ago

3·1 month agoTIL I’m using 1.28.1, didn’t know it wasn’t updating.

12·1 month ago

12·1 month agoEuropeans watching Americans receiving death threats from their president for saying military shouldn’t do illegal stuff 👀

6·1 month ago

6·1 month agoFairphone has variant with classic Android, which I have, so paying over NFC with Google wallet worked. I tried it, but do not use anymore by choice. Have you looked for alternatives? I heard about some, but since I do not use it, haven’t searched for them.

1·1 month ago

1·1 month agoOkay, it is easy to see -> a lot of people point it out

31·1 month ago

31·1 month agoI guess because it is easy to see that living painting and conscious LLMs are incomparable. One is physically impossible, the other is more philosophical and speculative, maybe even undecidable.

1·1 month ago

1·1 month agoI would say that artificial neuron nets try to mimic real neurons, they were inspired by them. But there are a lot of differences between them. I studied artificial intelligence, so my experience is mainly with the artificial neurons. But from my limited knowledge, the real neural nets have no structure (like layers), have binary inputs and outputs (when activity on the inputs are large enough, the neuron emits a signal) and every day bunch of neurons die, which leads to restructurizing of the network. Also from what I remember, short time memory is “saved” as cycling neural activities and during sleep the information is stored into neurons proteins and become long time memory. However, modern artificial networks (modern means last 40 years) are usually organized into layers whose struktuře is fixed and have inputs and outputs as real numbers. It’s true that the context is needed for modern LLMs that use decoder-only architecture (which are most of them). But the context can be viewed as a memory itself in the process of generation since for each new token new neurons are added to the net. There are also techniques like Low Rank Adaptation (LoRA) that are used for quick and effective fine-tuning of neural networks. I think these techniques are used to train the specialized agents or to specialize a chatbot for a user. I even used this tevhnique to train my own LLM from an existing one that I wouldn’t be able to train otherwise due to GPU memory constraints.

TLDR: I think the difference between real and artificial neuron nets is too huge for memory to have the same meaning in both.

11·1 month ago

11·1 month agoAs I said in another comment, doesn’t the ChatGPT app allow a live converation with a user? I do not use it, but I saw that it can continuously listen to the user and react live to it, even use a camera. There is a problem with the growing context, since this limited. But I saw in some places that the context can be replaced with LLM generated chat summary. So I do not think the continuity is a obstacle. Unless you want unlimited history with all details preserved.

11·1 month ago

11·1 month agoI saw several papers about LLM safety (for example Alignment faking in large language models) that show some “hidden” self preserving behaviour in LLMs. But as I know, no-one understands whether this behaviour is just trained and does mean nothing or it emerged from the model complexity.

Also, I do not use the ChatGPT app, but doesn’t it have a live chat feature where it continuously listens to user and reacts to it? It can even take pictures. So the continuity isn’t a huge problem. And LLMs are able to interact with tools, so creating a tool that moves a robot hand shouldn’t be that complicated.

3·1 month ago

3·1 month agoI meant alive in the context of the post. Everyone knows what painting becoming alive means.

Thankfully, there is a lot of space near the stadiums to build temporary housing, solving at least a problem how to get from hotel to a game. And those parking lots are so huge, maybe even a plane could land in there too.