Yuu Yin

Keyoxide: https://keyoxide.org/9f193ae8aa25647ffc3146b5416f303b43c20ac3

OpenPGP: openpgp4fpr:9f193ae8aa25647ffc3146b5416f303b43c20ac3

- 61 Posts

- 52 Comments

Just use a community-led or non-profit-foundation-led distro: NixOS (better than Silverblue/Kinoite in every aspect they try to sell), Arch, or Debian.

For professional use, you generally go with Ubuntu or some RHEL derivative.

That is OK. As far as I understand, this is how Lemmy<->Mastodon ActivityPub integration is supposed to work.

Thanks for following us! That’s surely a cool feature of the fediverse. Feel welcome and free to post and comment on anything related to software engineering.

There is also !seresearch@lemmy.ml, which @rahulgopinath@lemmy.ml moderates.

I think it will eventually be the best alternative, but I won’t be using bcachefs for the next few years. For any server or professional needs, I’d go with ZFS. On my personal systems, I use Btrfs, including on NixOS.

It is much like things such as PMBOK (which has since changed to a principles-based body of knowledge): these things have no basis in the scientific method (they are not empirically based), and their origins trace back to DoD needs.

It also reminds me of this important research article, “The two paradigms of software development research”, posted here before: https://group.lt/post/46119

The two categories of models use substantially different terminology. The Rational models tend to organize development activities into minor variations of requirements, analysis, design, coding and testing – here called Royce’s Taxonomy because of their similarity to the Waterfall Model. All of the Empirical models deviate substantially from Royce’s Taxonomy. Royce’s Taxonomy – not any particular sequence – has been implicitly co-opted as the dominant software development process theory [5]. That is, many research articles, textbooks and standards assume:

- Virtually all software development activities can be divided into a small number of coherent, loosely-coupled categories.

- The categories are typically the same, regardless of the system under construction, project environment or who is doing the development.

- The categories approximate Royce’s Taxonomy.

… Royce’s Taxonomy is so ingrained as the dominant paradigm that it may be difficult to imagine a fundamentally different classification. However, good classification systems organize similar instances and help us make useful inferences [98]. Like a good system decomposition, a process model or theory should organize software development activities into categories that have high cohesion (activities within a category are highly related) and loose coupling (activities in different categories are loosely related) [99].

Royce’s Taxonomy is a problematic classification because it does not organize like with like. Consider, for example, the design phase. Some design decisions are made by “analysts” during what appears to be “requirements elicitation”, while others are made by “designers” during a “design meeting”, while others are made by “programmers” while “coding” or even “testing.” This means the “design” category exhibits neither high cohesion nor loose coupling. Similarly, consider the “testing” phase. Some kinds of testing are often done by “programmers” during the ostensible “coding” phase (e.g. static code analysis, fixing compilation errors) while others often done by “analysts” during what appears to be “requirements elicitation” (e.g. acceptance testing). Unit testing, meanwhile, includes designing and coding the unit tests.

Totally supportive. It is great to have a Wayland implementation in Rust (and to see Rust’s adoption by the FOSS community increasing); more specifically Smithay, which projects beyond System76’s are also building upon, for example this community WM: https://github.com/MagmaWM/MagmaWM

Wow, this is truly good; long ago I read that many delays in public healthcare services are due to no-shows. I liked that, with the information on who was more likely to no-show, UHP then contacted these people.

UHP was able to cut no-shows for patients who were highly likely not to show up by more than half. That patient population went from a dismal 15.63% show rate to 39.77%. A dramatic increase. At the same time, patients in the moderate category improved from a 42.14% show rate to 50.22%.
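A quick check of the quoted figures, treating each one as a show rate in percent: the high-risk group gained about 24 percentage points (its show rate rose roughly 2.5×), and the moderate group gained about 8 points. Below is a minimal Rust sketch of that arithmetic. `show_rate_change` is a hypothetical helper written for illustration; it does not come from the article.

```rust
/// Hypothetical helper: returns (percentage-point change, relative change in %)
/// between two show rates given in percent.
fn show_rate_change(before: f64, after: f64) -> (f64, f64) {
    let points = after - before;
    let relative = points / before * 100.0;
    (points, relative)
}

fn main() {
    // Show rates (%) as quoted above: (group, before, after).
    let groups = [("high risk", 15.63, 39.77), ("moderate", 42.14, 50.22)];
    for (name, before, after) in groups {
        let (points, relative) = show_rate_change(before, after);
        println!("{name}: {points:+.2} pp ({relative:+.1}% relative)");
    }
}
```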

Of course, this article sounds like an ad for eClinicalWorks, but it is an interesting and very good application of AI regardless.

For now, I mostly see work like this aimed at the construction phase. Little by little, we are automating the whole thing.

Just me doing a literature note:

The authors try to find faster algorithms for sorting sequences of 3 to 5 elements (since programs call these the most, including within larger sorts) using the computer’s assembly instead of higher-level C, where the number of possible instruction combinations, about 10^700, is comparable to a game of Go. After an instruction is selected for addition to the algorithm, if the test output given the currently selected instructions differs from the expected output, the model invalidates “the entire algorithm”? This way, they ended up with algorithms for the “LLVM libc++ sorting library that were up to 70% faster for shorter sequences and about 1.7% faster for sequences exceeding 250,000 elements.” Then they translated the assembly to C++ for LLVM libc++.
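The “sort a short fixed-length sequence” idea can be illustrated with a classic 3-element sorting network: a fixed sequence of compare-exchange steps with no data-dependent branching. This is a textbook network written in Rust, not the assembly routines the authors discovered. The exhaustive check only loosely mirrors the rejection step described above, where any mismatch against the expected output rejects the whole candidate. `sort3`, `broken_sort3` and `passes_all_tests` are hypothetical names.

```rust
/// Compare-exchange via min/max (branch-free in spirit): afterwards v[i] <= v[j].
fn compare_exchange(v: &mut [i32; 3], i: usize, j: usize) {
    let (lo, hi) = (v[i].min(v[j]), v[i].max(v[j]));
    v[i] = lo;
    v[j] = hi;
}

/// Textbook 3-element sorting network: comparators (0,1), (1,2), (0,1).
fn sort3(mut v: [i32; 3]) -> [i32; 3] {
    compare_exchange(&mut v, 0, 1);
    compare_exchange(&mut v, 1, 2); // the maximum is now at index 2
    compare_exchange(&mut v, 0, 1); // order the remaining two
    v
}

/// Deliberately broken candidate: the final comparator is missing.
fn broken_sort3(mut v: [i32; 3]) -> [i32; 3] {
    compare_exchange(&mut v, 0, 1);
    compare_exchange(&mut v, 1, 2);
    v
}

/// Runs `candidate` on every input in {0,1,2}^3 and compares it with the
/// standard library sort; a single mismatch rejects the whole candidate.
fn passes_all_tests(candidate: fn([i32; 3]) -> [i32; 3]) -> bool {
    for a in 0..3 {
        for b in 0..3 {
            for c in 0..3 {
                let input = [a, b, c];
                let mut expected = input;
                expected.sort_unstable();
                if candidate(input) != expected {
                    return false;
                }
            }
        }
    }
    true
}

fn main() {
    println!("{:?}", sort3([3, 1, 2])); // [1, 2, 3]
    println!("sort3 accepted: {}", passes_all_tests(sort3));
    println!("broken_sort3 accepted: {}", passes_all_tests(broken_sort3));
}
```

The 0-to-2 input range covers every ordering of three values, including ties. That is why the broken candidate is rejected, for example on `[2, 1, 0]`.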

Well, Darwin users, just like Linux users, should also work on making packages available on their platform, as Nix is still in its adoption phase. Many already are. IIRC, even I, who never use macOS, put some effort into making 1 or 2 packages (likely more) build on Darwin.

Yeah, that’s odd. But I guess most projects are using the master branch anyway, e.g. https://github.com/MagmaWM/MagmaWM/blob/0ec02c92d63dd43d45fa3534f565fa587db506af/Cargo.toml#L20-L21

I can keep Firefox bleeding edge without having to worry that the package manager is also going to update the base system, giving me a broken next boot if I run rolling releases.

On Nix[OS], one can use different Nixpkgs versions for specific packages. What I have, e.g., is 2 Nixpkgs flake inputs: nixpkgs and nixpkgs-update. The first provides most packages, including the base system, which I do not want to update regularly, while the second is for packages I want to update more often, like the Web browser (for security reasons, etc.).

e.g.

- https://codeberg.org/yuuyin/yuunix/src/branch/main/flake.nix#L52-L77

- packages with pkgs (nixpkgs flake) https://codeberg.org/yuuyin/yuunix/src/branch/main/profile/packages.nix#L12-L26

- firefox with pkgs-update (nixpkgs-update flake) https://codeberg.org/yuuyin/yuunix/src/branch/main/profile/app/firefox.nix#L14-L16

When I was packaging Flatpaks, the greatest downside is

No built in package manager

There is a repo with shared dependencies, but it contains very few. So you need to package all the dependencies yourself… So I personally am not interested in packaging for Flatpak except on very rare occasions… Nix and Guix are definitely better solutions (except for the isolation aspect, which is not a built-in feature; you need to set it up manually), and one can use them on many distros; Nix even on macOS!

Not OP, but I originally chose xmonad because of Haskell. I’m still on it because it is the only WM with a “contexts” feature/extension: https://github.com/procrat/xmonad-contexts

The sway devs declined the feature request for it: https://github.com/swaywm/sway/issues/4044

And I personally am now more interested in compositors/WMs built on Smithay (Rust) instead of wlroots (C), specifically https://github.com/MagmaWM/MagmaWM, which I suppose will be easier to extend.

Some of them will detect whether you are using virtualization. For example, http://safeexambrowser.org/ by ETH Zurich.

Ironically enough, it is free software https://github.com/SafeExamBrowser

Using it as the backend for a Web app that is very important to me (with possible IoT applications in the future as well), which I have already conceptualized and prototyped: this is what motivates me. I feel that, for this project specifically, I should first learn from the official Book (which I am doing) and play with the recommended libraries and Rust on Nails’ take on things. I also have many other interesting projects in mind and want to contribute to, e.g., Lemmy (I have many Rust projects git cloned, including it).

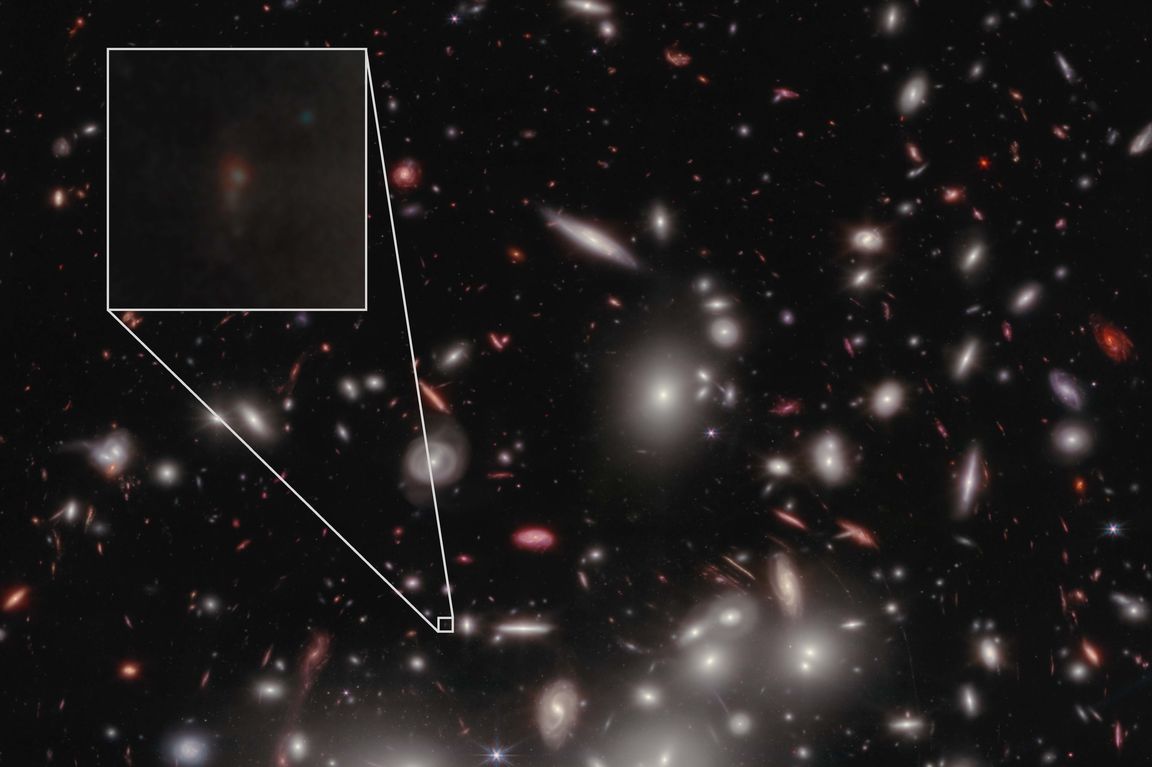

Oh, this is one of my wallpapers.

I made a 1920x1080 version of it by horizontally tiling 3 copies of it, like this (I got the freely licensed version from Wikimedia Commons under https://creativecommons.org/licenses/by-sa/4.0/deed.en).
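Horizontal tiling amounts to repeating each pixel row side by side. Below is a minimal Rust sketch on a raw row-major buffer. It assumes, hypothetically, a 640x1080 RGB tile, so that 3 copies give exactly 1920x1080; the actual source image size is not stated. A real workflow would first decode the image with an image library or editor and re-encode it afterwards. `tile_horizontally` is a hypothetical name.

```rust
/// Hypothetical helper: repeats every row of a row-major image buffer
/// `copies` times side by side. `channels` is bytes per pixel (3 for RGB).
fn tile_horizontally(
    pixels: &[u8],
    width: usize,
    height: usize,
    channels: usize,
    copies: usize,
) -> Vec<u8> {
    let row_len = width * channels;
    assert!(row_len > 0, "width and channels must be non-zero");
    assert_eq!(pixels.len(), row_len * height, "buffer size does not match dimensions");
    let mut out = Vec::with_capacity(pixels.len() * copies);
    for row in pixels.chunks_exact(row_len) {
        // Emit the same source row `copies` times to build one wide row.
        for _ in 0..copies {
            out.extend_from_slice(row);
        }
    }
    out
}

fn main() {
    // Assumed (hypothetical) tile size: 640x1080 RGB, filled with dummy data.
    let (w, h, ch) = (640, 1080, 3);
    let tile: Vec<u8> = (0..w * h * ch).map(|i| (i % 256) as u8).collect();
    let wide = tile_horizontally(&tile, w, h, ch, 3);
    println!("{} bytes = {}x{} RGB", wide.len(), w * 3, h);
}
```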