AIs are wonderful. They provoke wonder.

AIs are marvellous. They cause marvels.

AIs are fantastic. They create fantasies.

AIs are glamorous. They project glamour.

AIs are enchanting. They weave enchantment.

AIs are terrific. They beget terror.

The thing about words is that meanings can twist just like a snake, and if you want to find snakes look for them behind words that have changed their meaning.

No one ever said AIs are nice.

AIs are bad.

Is this from Terry Pratchett?

Yep, from Lords and Ladies

GNU STP.

If cats looked like frogs we’d realize what nasty, cruel little bastards they are. Style. That’s what people remember.

Edit: FYI this is from Terry Pratchett’s Lords and Ladies, the same book that OP paraphrased

Puking on the carpet, dropping dead things at your feet, licking at you, drawing your blood with sharp claws. Imagine a long slimy toad-lizard with those sharp claws, behaving like that.

Someone is a Pratchett fan.

Senpai remembered me :3

GNU Sir pTerry.

AIs are a tool, at least currently, and depend on the way you use them like any other tool. Future AGI would be a whole other dangerous can of paperclips, but we don't have that yet.

I never said I didn’t recognise the quote from Terry Pratchett’s Lords and Ladies (which, incidentally, shares many interesting parallels with The Witcher: namely, the elves being evil/not good, the elves having space-bending powers, the elves being from another world whose portal is opened by a young girl, and their association with snow/frost. Like, I’m not saying Andrzej Sapkowski was definitely inspired, but it’s an interesting coincidence at least.)

Edit: I didn’t click your link, for your information

This is the internet. No one cares if you know something or not. You don’t have to be defensive about it.

AI at this stage is just a tool. This might change one day, but today is not that day. Blame the user, not the tool.

AI and ML were being used to assist in scientific research long before ChatGPT or StableDiffusion hit the mainstream news cycle. AIs can be used to predict all sorts of outcomes, including ones relevant to climate, weather, and even medical treatment. The university I work for even has a funded PhD program looking at using AI algorithms to detect cancer better; I found out because one of my friends is applying for it.

The research I am doing with AI is not quite as important as that, but it could shape the future of both cyber security and education, as I am looking at using it for teaching cyber security students about ethical hacking and security. Do people also use LLMs to hack businesses or government organisations and cause mayhem? Quite probably, and they definitely will in the future. That doesn’t mean that the tool itself is bad, just that some people will inevitably abuse it.

Not all of this stuff is run by private businesses either. A lot of work is done by open source devs working on improving publicly available AI and ML models in their spare time. Likewise some of this stuff is publicly funded through universities like mine. There are people way better than me out there using AIs for all sorts of good things including stopping hackers, curing patients, teaching the next generation, or monitoring climate change. Some of them have been doing it for years.

I was just making a clever reference

The problem is that some people like me won’t get that reference and instead think AIs are universally bad. A lot of people already think this way, and it’s hard to know who believes what.

Clearly, based on your responses, you don’t think AI/LLMs are universally bad. And anyone who is that easily swayed by what is essentially a clever shitpost likely also thinks the earth is flat and birds aren’t real.

You know. Morons.

The problem is that people selling LLMs keep calling them AI, and people keep buying their bullshit.

AI isn’t necessarily bad. LLMs are.

LLMs have legitimate uses today even if they are currently somewhat limited. In the future they will have more legitimate and illegitimate uses. The capabilities of current LLMs are often oversold though, which leads to a lot of this resentment.

Edit: also LLMs very much are AI (specifically ANI) and ML. It’s literally a form of deep learning. It’s not AGI, but nobody with half a brain ever claimed it was.

> LLMs have legitimate uses today

No they don’t. The only thing they can be somewhat reliable for is autocomplete, and the slight improvement in quality doesn’t compensate for the massive increase in costs.

> In the future they will have more legitimate and illegitimate uses

No. Thanks to LLM peddlers being excessively greedy and saturating the internet with LLM-generated garbage, newly trained models will be poisoned and only get worse with every iteration.

> The capabilities of current LLMs are often oversold

LLMs have only one capability: to produce the most statistically likely token after a given chain of tokens, according to their model.

Future LLMs will still only have this capability, but since their models will have been trained on LLM generated garbage their results will quickly diverge from anything even remotely intelligible.
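For readers unfamiliar with the "most statistically likely next token" description above, here is a minimal sketch of greedy decoding. It assumes a toy bigram count table stands in for the model (real LLMs use a neural network conditioned on a long context, and frequently sample rather than always taking the top token), and every function name here is hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token (toy stand-in for a model)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, prev):
    """Greedy step: return the most frequent follower of `prev`, or None if unseen."""
    followers = counts.get(prev)  # .get does not trigger defaultdict's factory
    if not followers:
        return None
    # Ties are broken by first-seen order, per Counter.most_common.
    return followers.most_common(1)[0][0]


def generate(counts, start, max_len=5):
    """Repeatedly append the most likely next token (greedy decoding)."""
    out = [start]
    for _ in range(max_len):
        nxt = most_likely_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


corpus = "ais are wonderful ais are marvellous ais are fantastic".split()
model = train_bigram(corpus)
print(generate(model, "ais", max_len=3))  # ['ais', 'are', 'wonderful', 'ais']
```

The toy model shows the mechanism only; it says nothing either way about how capable a large model trained this way can be, which is what the thread is arguing about.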

This is false. Anyone who has used these tools for long enough can tell you this is false.

LLMs have been used to write computer code, craft malware, and even semi-independently hack systems with the support of other pieces of software. They can even grade students’ work and give feedback, though it’s unclear how accurate that feedback will be. As someone who actually researches the use of both LLMs and other forms of AI, I can tell you that you are severely underestimating their current capabilities, never mind what they can do in the future.

I also don’t know how you came to the conclusion that hardware performance is always an issue, given that LLM model sizes vary immensely, as do their performance requirements. There are LLMs that can run, and run well, on an average laptop or even a smartphone. It honestly makes me think you have never heard of LLaMA models, including TinyLlama, or similar projects.

> Future LLMs will still only have this capability, but since their models will have been trained on LLM generated garbage their results will quickly diverge from anything even remotely intelligible.

You can filter data you get from the internet to websites archived before LLMs were even invented as a concept. This is trivial to do for some data sets as well. Some data sets used for this training have already been created without LLM output (think about how the first LLM was trained).
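As a rough illustration of filtering by archive date, here is a minimal sketch. It assumes each scraped document carries a capture date (the `Document` type and `archived_on` field are hypothetical, not any real dataset's schema), and the cutoff date is purely illustrative, not a recommendation.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Document:
    url: str
    archived_on: date  # hypothetical field: when the page was first captured
    text: str


def pre_cutoff(docs, cutoff):
    """Keep only documents archived strictly before `cutoff`."""
    return [d for d in docs if d.archived_on < cutoff]


docs = [
    Document("https://example.org/a", date(2015, 6, 1), "old page"),
    Document("https://example.org/b", date(2023, 3, 9), "new page"),
]
CUTOFF = date(2018, 1, 1)  # illustrative cutoff only
kept = pre_cutoff(docs, CUTOFF)
print([d.url for d in kept])  # ['https://example.org/a']
```

In practice the hard part is trusting the dates rather than writing the filter: the sketch assumes the capture date is accurate, which is why archived snapshots are the natural source for it.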

Sources:

- https://arxiv.org/abs/2402.06664

- https://doi.org/10.1145/3607505.3607513

- https://medium.com/google-developer-experts/ml-story-mobilellama3-run-llama3-locally-on-mobile-36182fed3889

- https://github.com/jzhang38/TinyLlama

- Both my own personal direct experience and the experience of my research supervisor, with a dash of basic computer systems knowledge

I appreciate it <3

> is just a tool.

Oh, thank you. I forgot. Sometimes I can’t remember.

You know, thinking about it, I doubt this is a coincidence.

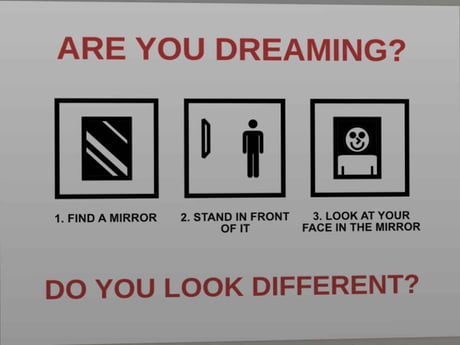

The finger-counting is familiar to me as a technique for lucid dreaming. If you look at your hands in a dream, your brain will kinda fuck it up, so if you train yourself to pay attention to that you realize you are dreaming and become lucid.

My guess is that the origin of fae is something like sleep paralysis demons or hallucinations, and people realized they could detect those from the same flaws of our own imagination.

Now for AI, it isn’t really drawing. What we are using in image-AI is still much more like projecting up a mental image, dreaming. We can’t get it right all at once either; even the human brain isn’t good enough at it, so it is reasonable that image-AI makes the same kinds of mistakes.

The next step would logically be to emulate the drawing process. You need to imagine up an image, then observe it at large, check for inconsistencies using reasoning and visual intuition.

Hone in on any problems, stuff that doesn’t look right or doesn’t make sense. Lines not straight.

Then start reimagining those sections, applying learned techniques and strategies, painter stuff (I am not an artist).

Loosely, I imagine the AI operating a digital drawing program with a lot of extra, unusual tools like paste imagination, telepathic select, or morph from mind. The main thing differentiating dreaming from painting is that for painting you can “write stuff down” and don’t have to keep it all in your head all the time. This allows you to iterate and focus in without losing all the detail everywhere else.

The other day I thought I was in a dream because my torso was missing when I saw my reflection in a window. Even when I moved. It legit made me jump. Turns out the middle section of the window was open so it was reflecting light from someplace else.

If you feel like you can think clearly and are questioning if you are dreaming but are unsure, you are not.

All methods of lucid dreaming aim at making you think clearly and question whether you are in a dream. With that thought, it should be quite easy to confirm that you are in fact dreaming. Dreams are really not that good; sleeping is just kinda like a heavy suspension of disbelief.

This must depend on the person.

Dreaming is dreaming and being awake is being awake.

Completely different experiences for me, can’t imagine how someone could confuse them, though people obviously do, so they must be experiencing it differently.

These tricks have never worked for me, I wonder if that has some implication. I can see working clocks in dreams, both the digital and analog kinds. Reflections look normal. Hell, I’ve looked directly at myself (or a doppelganger?) in dreams before.

Yeah. It is not like you can perfectly recreate them, but as long as you don’t see a problem with whatever your brain fabricates it’s not gonna do anything.

What I used to do was try to breathe through my nose. That is a different mechanism, where, probably for safety, your body doesn’t “disconnect” your breathing. If you hold your nose shut, you will still be able to breathe in a dream.

It is something you can easily make a habit, as just quickly pinching your nose doesn’t look weird, and then you will naturally do it in your sleep too and become lucid.

All you really need is a moment of doubt, and if you have experienced a few dreams you will always be able to tell if you are dreaming or awake at a thought, at least in my experience.

I stopped lucid dreaming a while ago, but I think I am still always aware when I sleep, based just on how I sleep. Ever since then it feels more like I am just going along with my dreams most of the time, and occasionally I just decide a nightmare sucks too badly and change it or wake myself up.

As an extra advantage to the nose pinching trick, I no longer turn every dream into a nightmare from seeing my distorted figure in the mirror!

However I have a slight problem in that I struggle to connect to my mirror image even when awake and sober lmao

Then again, sometimes it does feel like I’m dreaming when awake and sober so

Look at text. Look away. Look again. Are the words the same? In a dream, they sure as fuck won’t be.

You watch Evil?

Yes, but I knew that before watching Evil. I’ve been lucid dreaming for more than two decades now.

Whenever I see weird things in a dream and I’m lucid enough to notice, I just panic thinking that something’s wrong with my brain, followed by doing anything I can to get to a hospital.

pretty funny how different reactions ppl can have, when i was younger and thought i might be lucid dreaming my first thought would always be to try generating flame in my palm or some other anime move

Is this really useful? Like, is this something people ever need to do? I don’t have lucid dreams very often, but the rare times a dream has led me to the thought of “hold on, am I dreaming?” were basically immediately answered by just, uh, vibes, I guess? Like, it’s always just been instantly obvious that I’m dreaming the moment I start questioning it, no tests necessary. At worst I might have to try to remember what I did the day before and what I was supposed to be doing that day and see if that is at all compatible with the scenario I’m dreaming about, which it usually isn’t.

I think the idea is to build a habit of checking, so you don’t even need to have that “hold on, am I dreaming?” moment. You just habitually do that thing you always do, and then “oh it seems I’m dreaming. I didn’t notice”

I see. Will avoid, then. I don’t like lucid dreaming, always wake up right away. Whenever I notice I’m dreaming it becomes hard not to notice that I’m in my bed and that I can feel my covers and by that point it’s all over, so whenever I notice I’m dreaming I just cut the crap and open my eyes for a couple of seconds to wake myself up and then close them again so I can get back to proper sleep.

DO YOU LOOK DIFFERENT?

Depends on how many hours I’ve been in bed. 🤷♂️

I had a similar thought about AI; that it’s more like imagining something than actually drawing it. When you ask a program like stable diffusion to draw something, you’re basically asking it to imagine something and then you reach inside its head to pull the image out. I think that if AI was forced to draw the “ol’ fashioned way” then it’d be both better and worse. The results would be more “correct” but the actual quality would probably be worse. It’d also take it longer to get to the same level as a professional artist.

There are a ton of shortcuts you can take in the digital world to save time; you’re basically a god limited only by your computer’s specs. You can do extremely complex things near-instantly. This saves significantly on training time when it comes to AI. An AI forced to learn how to do art the ol’ fashioned way would take significantly longer because it can’t take the same shortcuts.

Yeah. You want to preserve the AI’s abilities, hence adding the “paste imagination” feature, for example. If you simply use that and finish “editing”, that is current AI. Then you can quickly redo only sections from imagination until they look good, maybe with a specific prompt or another form of understanding about what needs to be done and changed there.

We can invert our visual center: we see an image, think about it, then summon a mental version of that painting back as an image by converting the abstraction of it, and change things about the abstraction until the mental image seems good. This abstraction can handle ideas like recognizing, moving, scaling, and recoloring objects. It can do all we can imagine because it is literally how we interpret the world. Then we spend hours trying to paint that mental image using limited tools. If we could just project something the same way we see, that would probably match image-AI in the initial output, but after tens or hundreds of passes you could likely, within minutes, create something completely impossible by any other means.

It’s not about the medium being used, it’s that AI doesn’t know what things are. You and I have a living library of how a 3D object works in space. When you train your artistic abilities, all you’re really doing is perfecting that internal library and learning the techniques to bring it out of your own head.

When you draw an apple, you bring forth the concept of an apple in your mind and then put that down on the page. When an AI draws an apple, it creates a statistically probable image of an apple based on its training data. It doesn’t know what an apple is, it just makes something that was good enough to pass the testing machine.

AIs make products like a dream, because just like in your dreams, there’s no reality to anchor them to. You hallucinate fairly similarly to AIs, even while waking, but your brain then adjusts its guesses using sensory inputs. That’s why you can feel the pain of a stubbed toe instantly if you see it, even though it actually takes the signal some time to get to your brain. Or how your brain will synchronize the sound coming out of someone’s mouth with the image, even though that’s not what is actually happening. And an AI will never be able to do that, because all inputs are identical to it.

Your brain doesn’t have a single module that knows what an apple is. Instead, different parts work together to form the concept. The occipital lobe, in particular, processes how an apple looks, but it doesn’t “know” what an apple is; it just handles visual characteristics. When these various modules combine, they produce your idea of an apple, which is then interpreted by another part of the brain.

When you decide to draw an apple, your brain sends signals to the cerebellum to move your hands. The cerebellum doesn’t know what an apple is either. It simply follows instructions to draw shapes based on input from other brain regions that handle motor skills and artistic representation. Even those parts don’t fully understand what an apple is—they just act on synaptic patterns related to it.

AI, by comparison, is missing many of these modules. It doesn’t know the taste or scent of an apple because it lacks sensory input for taste or scent. AI lacks a Cerebral Cortex, Reticular Activating System, Posterior Parietal Cortex, Anterior Cingulate Cortex, and the necessary supporting structures to experience consciousness, so it doesn’t “know” or “sense” anything in the way a human brain does. Instead, AI works through patterns and data, never experiencing the world as we do.

This is why AI often creates dream-like images. It can see and replicate patterns similar to the synaptic patterns created by the occipital lobes in the brain, but without the grounding of consciousness or the other sensory inputs and corrections that come from real-world experience, its creations lack the coherence and depth of human perception. AI doesn’t have the lived understanding or context, leading to images that can feel abstract or surreal, much like dreams.

Yes! Exactly! You had the next thought that I did.

Never give them your name.

It all checks out.

I am the Rumpelstiltskin now!

“AI art” is an oxymoron.

That’s a narrow view of art

Okay, this is a loaded question I guess, but would you like to elaborate?

AI generated Art Simulacrum

god these instance names are never not funny

Horny instances have the best names.

they really do.

My Grandmother, what big eyes you have.

But there’s no hands

Yeah, but there’s a manufactured object that should have near perfect symmetry and doesn’t. It kind of looks symmetrical, but that’s not how symmetry works.

It’s got the “good enough” buff going for it though, which is good enough for most people lol

It straight up hurts to look at. /g

It’s dangerous to go alone! Take this:

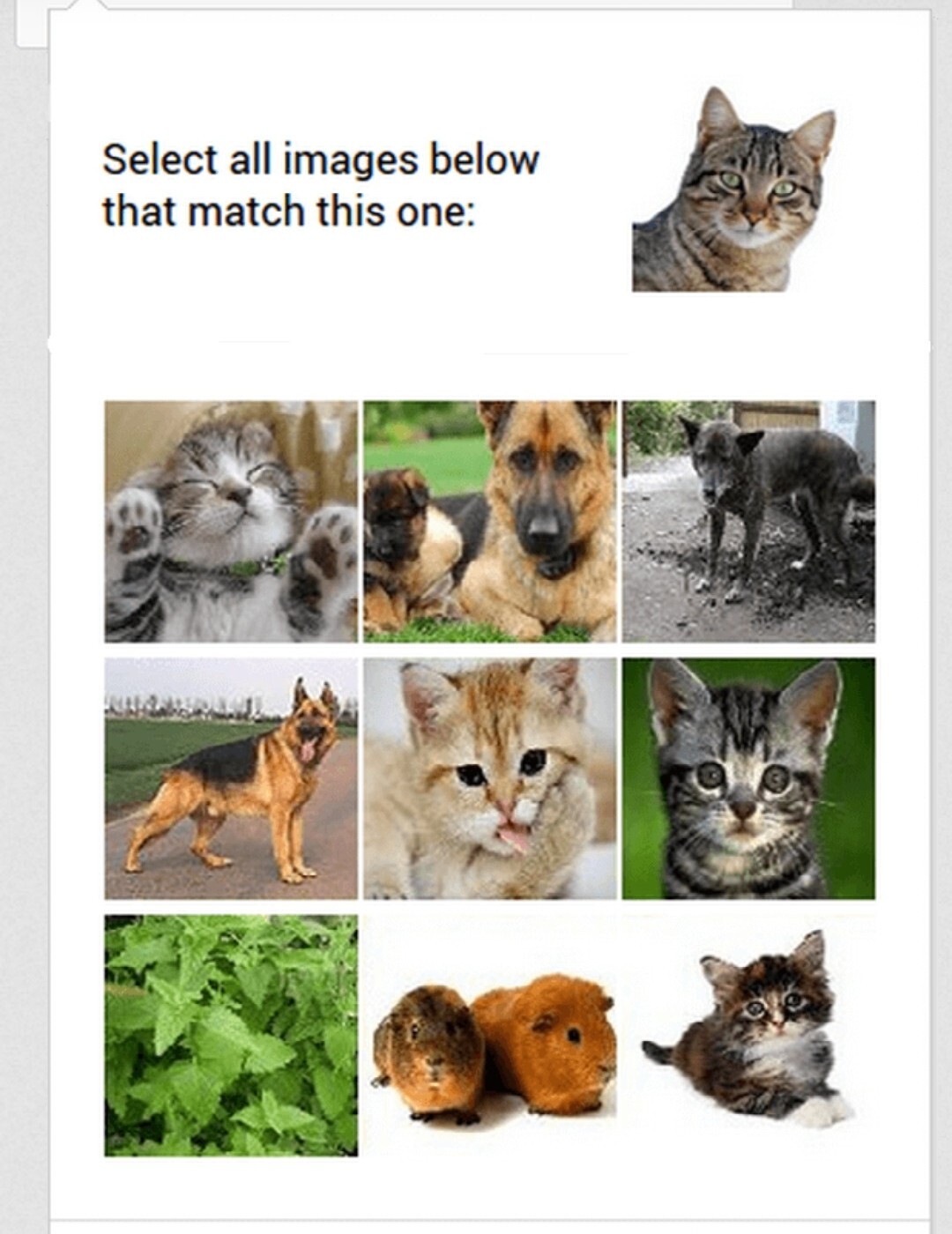

Ok, I’m confused. What sort of “matching” does it want? The cat? Or the poker face?

‼️‼️BOT DETECTED‼️‼️

Uh oh.

ACTIVATING: CALLISTO PROTOCOL


Count the fingers.

Careful what you say for it may be repeated in strange whispers to parts unknown.

Look for symbols.

Understand that style is as important as form.

Repetition

(Explainer: https://www.reddit.com/r/40kLore/comments/11xz6yc/comment/jd6aq4k/ )

Die Geister, die ich rief… (“The spirits that I summoned…”)

This means we’re in a timeline loop, just altered